The Great Vibepression

Why are vibes so bad when economic metrics don’t look that bad? Scott Alexander tries to find an answer to this question. Zvi too.

I have great respect for Scott and Zvi. Their rationality and ability to deduce answers from empirical data are important. However, I am not as rational as they are, so I can only talk about the “vibe” side of things.

Analyzing the “vibecession” (or the Great Vibepression) through the lens of empirical data presents a problem: data is necessarily historical, while the vibecession is about the future. The future is, by definition, uncharted and unquantifiable. One can only sense the future, and what we sense is impending doom.

I think it’s uncharitable to see the “high” expectations of younger generations as the main reason for the vibepression. High relative to what? And high for whom? Older generations, and most participants in the vibepression discourse, had time. They had the chance to show up, do their work, save money and slowly accumulate wealth. They had that kind of deal with the system. That deal is dead. Do I really have time on my hands? Considering the hyperobjects of our time, I need safety. Safety requires money. I cannot defer this requirement to the future. I am deeply uncertain about what the future will bring.

Ukrainians had their lives turned upside down in a day. The war has been going on for 4 years now. Israel hastened the genocide. They hit Iran multiple times. When the time comes they will hit again, and probably harder this time. There were armed skirmishes between India and Pakistan. Thailand and Cambodia are once again on the brink of war. The USA abducted Maduro from Venezuela. They now threaten Cuba and Greenland. The world order was fairly successful at isolating conflicts, especially to the Middle East. Now there are armed conflicts on every continent. All countries are increasing their military spending. One doesn’t need to be Jung to sense the incoming Great War.

Even if we don’t start killing each other with high-end technological weapons or exterminate ourselves with nuclear bombs, there is also AI on the table. Leaving the existential risk aside[1], it’s clear that AI will lead to the end of most jobs. Maybe not in a year, maybe not in two, but soon enough to know that I am going to face the consequences. I’m a software developer with 8 years of experience. I am confident in my engineering skills. I know that I’m in a very good position and will most likely be able to find a place for myself in the industry. However, I’m not sure I want to be a mere project manager for AI agents.

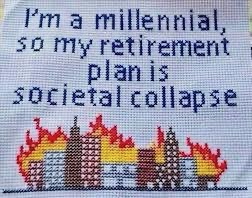

I am a socialist. This means I am ideologically invested in the idea of societal collapse because it might lead to the collapse of capitalism. I might be over-indexing on these threats. Maybe everything will turn out to be great. But the world around me right now feels like a Nick Land wet dream. I want my life and I want it now.

-

[1] Although even Sam Altman is shamelessly saying that “AI will most likely lead to the end of the world”.